Towards a model of web browser security

One of the values of models is that they help us engage with areas where the detail would otherwise be overwhelming. For example, C is a model of how a CPU works that allows engineers to defer certain details to the compiler, rather than writing in assembler. It empowers software developers to write for many CPU architectures at once. Many security flaws happen in areas the models simplify. Consider: what if the stack grew away from the stack pointer, rather than towards it? The layout of the stack is a detail that is modeled away, and stack-smashing attacks depend on exactly that detail.

Information security is a broad industry, requiring and rewarding specialization. We often see intense specialization, which naturally results in expertise built in silos, and the tribal separation of knowledge creates more gaps. At the same time, there is a stereotype that generalists end up focused on policy or “risk management,” where a lack of technical depth can hide. (That’s not to say that all risk managers are generalists, or that none of them have real technical depth.)

If we want to enable more security generalists, and we want those generalists to remain grounded, we need to make it easier to learn about new areas. Part of that is good models; part is good exercises that balance challenge against skill level; part is the availability of mentoring; and I’m sure there are other parts I’m missing.

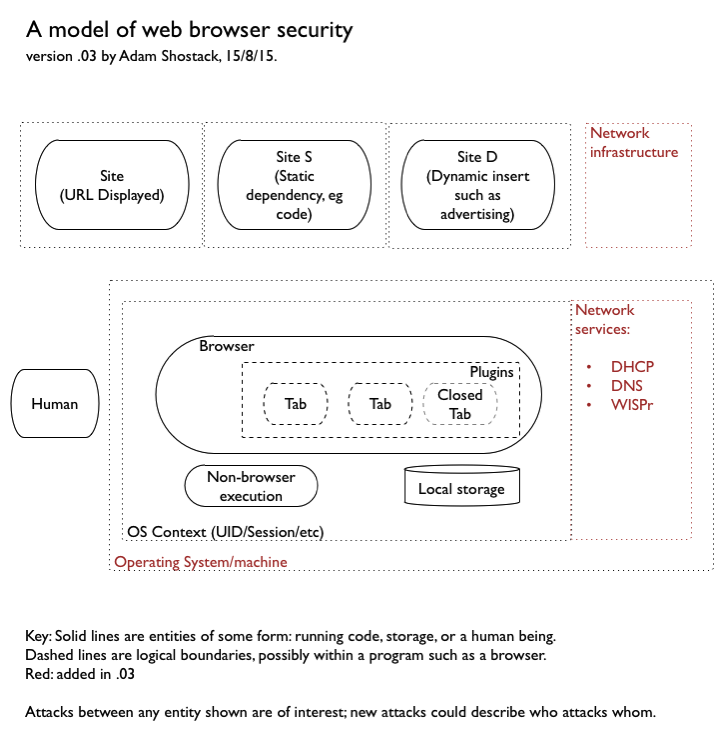

I enjoyed many things about Michal Zalewski’s book “The Tangled Web.” One thing I wanted was a better way to keep track of who attacks whom, to help me contextualize and remember the attacks. But such a model is not trivial to create. This morning, motivated by a conversation between Trey Ford and Chris Rohlf, I decided to take a stab at drafting a model for thinking about where the trust boundaries exist.

The words which open my threat modeling book are “all models are wrong, some models are useful.” I would appreciate feedback on this model. What’s missing, and why does it matter? What attacks require showing a new element in this software model?

[Update 1: please leave comments here, not on Twitter.]

- Fabio Cerullo suggests adding the layout engine. It’s not clear to me what additional threats become visible if it is shown explicitly, perhaps because I’m not an expert in that area.

- Fernando Montenegro asks about network services such as DNS, which I’m adding, and also about shared trust (CA certificates), which overlaps with a question about the supply chain from Mayer Sharma.

- Chris Rohlf points out the “web browser protection profile.”

I could be convinced otherwise, but I think the supply chain is best addressed by a separate model. Having a secure installation and update mechanism is an important mitigation for many types of bugs, but this model is for thinking about the boundaries between the components.

Reviewing the protection profile, I found that it mentions the following threats:

| Threat | Comment |
| --- | --- |
| Malicious updates | Out of scope (supply chain) |
| Malicious/flawed add-on | Out of scope (supply chain) |
| Network eavesdropping/attack | Not showing all the data flows, for simplicity (is this the right call?) |
| Data access | Local storage is shown |

Also, the protection profile is 88 pages long and hard to engage with. While it provides far more detail and allows me to cross-check the software model, it doesn’t help me think about interactions between components.

[End update 1]

I think iframes are a critical trust boundary for web applications that is currently missing from the model: CSRF is an example threat, and considering it also identifies a number of data flows. A page’s iframes are nested within a browsing context (and some flows can occur only inside a browsing context). Additionally, I would call them origins instead of sites.
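To make the origin/site distinction concrete, here is a minimal Python sketch of a same-origin check. It assumes the usual definition of an origin as the (scheme, host, port) tuple and fills in default ports for http and https only; it ignores opaque origins (such as data: URLs), internationalized domain names, and other details real browsers handle. The names `origin` and `same_origin` are hypothetical, chosen for illustration.

```python
from typing import Optional, Tuple
from urllib.parse import urlsplit

# Default ports for the two schemes this sketch handles (assumption: other
# schemes get no default and compare on an explicit port only).
DEFAULT_PORTS = {"http": 80, "https": 443}


def origin(url: str) -> Tuple[str, str, Optional[int]]:
    """Return a simplified (scheme, host, port) origin tuple for a URL."""
    parts = urlsplit(url)
    scheme = parts.scheme.lower()
    # urllib lowercases .hostname; it is None when the URL has no host.
    host = parts.hostname or ""
    # Use the explicit port if present, otherwise the scheme's default.
    port = parts.port if parts.port is not None else DEFAULT_PORTS.get(scheme)
    return (scheme, host, port)


def same_origin(a: str, b: str) -> bool:
    """Two URLs are same-origin when scheme, host, and port all match."""
    return origin(a) == origin(b)


print(same_origin("https://example.com/a", "https://example.com:443/b"))  # True
print(same_origin("https://example.com/", "http://example.com/"))  # False
print(same_origin("https://a.example.com/", "https://b.example.com/"))  # False
```

Under this definition, https://a.example.com and https://b.example.com are different origins, while a site-based comparison, which looks at the scheme and the registrable domain (derived from the Public Suffix List), would treat them as the same site. That gap is one reason the choice of term matters when drawing trust boundaries.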

Also, I wonder what you think of http://devd.me/papers/websec-csf10.pdf. While it is admittedly more focused on automated analysis, the “logical model” discussed in the earlier sections of the paper seems closely related.

Thanks for contributing this model of web browser security.

An important boundary (not depicted in the current revision of the diagram above) exists between the components running inside and outside the browser sandboxes (e.g., Chrome renderer sandbox, Firefox content process sandbox).

Wouldn’t you want to show both the rendering and (Java)script engines, and possibly execution done directly on the GPU?

Hi!

Link to “Protection Profile for Web Browsers” is broken.

Please don’t forget to include this giant mess of a parser [1][2], which is the most insecure thing in a browser.

[1]: http://image.slidesharecdn.com/asystematicanalysisofxsssanitizationinwebapplicationframeworks-140704084217-phpapp01/95/a-systematic-analysis-of-xss-sanitization-in-web-application-frameworks-15-638.jpg?cb=1404463435 [link no longer works]

[2]: “A Systematic Analysis of XSS Sanitization in Web Application Frameworks” by Joel Weinberger, Prateek Saxena, Devdatta Akhawe, Matthew Finifter, Richard Shin, and Dawn Song