Lean Startups & the New School

On Friday, I watched Eric Ries talk about his new Lean Startup book, and wanted to talk about how it might relate to security.

Ries conceives of startups as businesses operating under conditions of high uncertainty, which includes things you might not think of as startups. In fact, he thinks that startups are everywhere, even inside of large businesses. You can agree or not, but suspend skepticism for a moment. He also says that startups are really about management and good decision making under conditions of high uncertainty.

He tells the story of IMVU, a startup he founded to make 3D avatars as a plugin for instant messenger systems. He walked through why they’d made the decisions they had, and then said every single thing he’d said was wrong. He said that the key was to learn the lessons faster in order to focus in on the right thing. In that case, they could have saved 6 months by just putting up a download page and seeing if anyone wanted to download the client. They wouldn’t have even needed a 404 page, because no one ever clicked the download button.

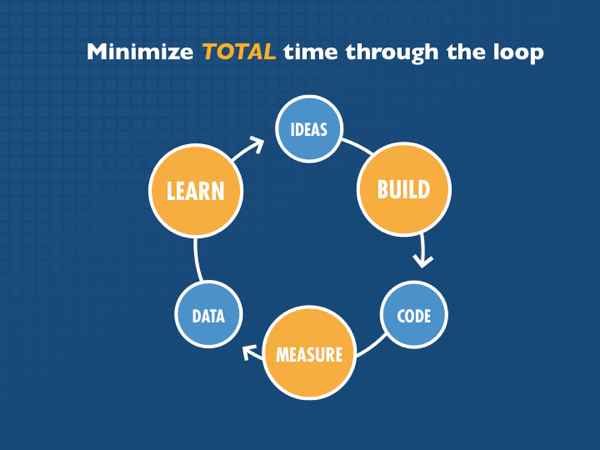

The key lesson he takes from that is to look for ways to learn faster, and to focus on pivoting towards good business choices. Ries defines a pivot as one turn through a cycle of “build, measure, learn.”

Ries jokes about how we talk about “learning a lot” when we fail. But we usually fail to structure our activities so that we’ll learn useful things. And so under conditions of high uncertainty, we should do things that we think will succeed, but if they don’t, we can learn from them. And we should do them as quickly as possible, so if we learn we’re not successful, we can try something else. We can pivot.

I want to focus on how that might apply to information security. In security, we have lots of ideas, and we’ve built lots of things. We start to hit a wall when we get to measurement. How much of what we built changed things? (I’m jumping to the assumption that someone wanted what you built enough to deploy it. That’s a risky assumption and one Ries pushes against with good reason.) When we get to measuring, we want data on how much your widget changed things. And that’s hard. The threat environment changes over time. Maybe all the APTs were on vacation last week. Maybe all your protestors were off Occupying Wall Street. Maybe you deployed the technology in a week when someone dropped 34 0days on your SCADA system. There are a lot of external factors that can be hard to see, and so the data can be thin.

That thin data is something that can be addressed. When doctors study new drugs, there’s likely going to be variation in how people eat, how they exercise, how well they sleep, and all sorts of things. So they study lots of people, and can learn by comparing one group to another group. The bigger the study, the less likely that some strange property of the participants is changing the outcome.
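To put rough numbers on why bigger studies help, here is a minimal sketch. Everything in it is an assumption for illustration: the incident rates are made up, the two groups are imagined as independent and equally sized, and the "noise" figure is the usual 95% normal-approximation margin on the difference between two rates.

```python
import math

def standard_error(rate_a, rate_b, group_size):
    """Standard error of the difference between two observed rates,
    assuming two independent groups of equal size."""
    var_a = rate_a * (1 - rate_a) / group_size
    var_b = rate_b * (1 - rate_b) / group_size
    return math.sqrt(var_a + var_b)

# Hypothetical incident rates: 10% for organizations with some control,
# 15% for those without it. These numbers are invented for the example.
RATE_WITH, RATE_WITHOUT = 0.10, 0.15

for group_size in (10, 100, 1000, 10000):
    se = standard_error(RATE_WITH, RATE_WITHOUT, group_size)
    margin = 1.96 * se  # approximate 95% margin of error
    print(f"{group_size:>6} per group: difference 0.05, noise about +/- {margin:.3f}")
```

With these invented rates, the noise is wider than the difference itself at 100 organizations per group, and much narrower than it at 10,000. The margin shrinks with the square root of the group size, so each hundredfold increase in shared data cuts the noise by a factor of ten.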

But in information security, we keep our activities and our outcomes secret. We could tell you, but first we’d have to spout cliches. We can’t possibly tell you what brand of firewall we have, it might help attackers who don’t know how to use netcat. And we certainly can’t tell you how attackers got in, we have to wait for them to tell you on Pastebin.

And so we don’t learn. We don’t pivot. What can we do about that?

We can look at the many, many people who have announced breaches, and see that they didn’t really suffer. We can look at work like SensePost has offered up at Black Hat, showing that our technology deployments can be discovered through our participation on tech support forums.

We can look to measure our current activities, and see if we can test them or learn from them.

Or we can keep doing what we’re doing, and hope our best practices make themselves better.