Privacy Enhancing Technologies and Threat Modeling

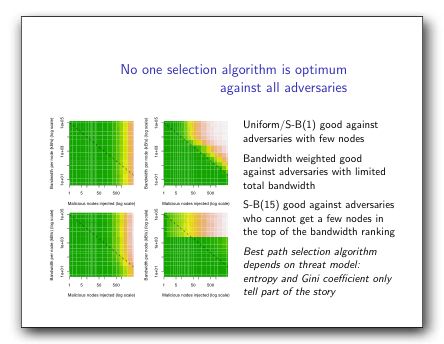

Steven Murdoch and Robert Watson have some interesting results about how to model the Tor network in “Metrics for Security and Performance in Low-Latency Anonymity Systems” (or slides). It’s a good paper, but what jumped out at me was their result that the right security tradeoff depends on how you believe attackers will behave. This is somewhat unusual in two ways: first, it implies the need for a dynamic analysis, and second, that analysis will only work if we have data.

We often apply a very static analysis to attackers: they have these capabilities and motivations, and they will stick with their actions. This paper shows a real-world example in which attackers who gain more resources behave differently, rather than doing more of what they did before. So operating a secure Tor system requires an understanding of how particular attackers are behaving, and how they choose to attack the system at any given time.

There’s a sense in which this is not surprising, but these dynamic models rarely show up in analysis.
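To make the point concrete, here is a toy sketch of why the defender's best choice can flip with the attacker's behaviour. It is not the model from the paper. Everything in it is an assumption for illustration: the `Network` and `Attacker` types, the numbers, and the simplification that a path is compromised when both its entry and exit relays are picked independently from the attacker's share of the network.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Network:
    nodes: int        # number of honest relays (hypothetical figure)
    bandwidth: float  # total honest bandwidth, arbitrary units


@dataclass(frozen=True)
class Attacker:
    nodes: int        # relays the attacker runs
    bandwidth: float  # total bandwidth across those relays


def compromise_probability(net: Network, atk: Attacker, weighted: bool) -> float:
    """Chance that both ends of a path are attacker relays.

    Assumes each end is chosen independently, either uniformly by relay
    count or in proportion to bandwidth. Real path selection is more involved.
    """
    if weighted:
        share = atk.bandwidth / (net.bandwidth + atk.bandwidth)
    else:
        share = atk.nodes / (net.nodes + atk.nodes)
    return share ** 2


def safer_policy(net: Network, atk: Attacker) -> str:
    """Return the selection policy with the lower compromise probability."""
    uniform = compromise_probability(net, atk, weighted=False)
    weighted = compromise_probability(net, atk, weighted=True)
    return "bandwidth-weighted" if weighted < uniform else "uniform"


honest = Network(nodes=1000, bandwidth=1000.0)
botnet = Attacker(nodes=500, bandwidth=50.0)      # many slow relays
datacenter = Attacker(nodes=5, bandwidth=500.0)   # a few fast relays

for name, atk in (("botnet", botnet), ("datacenter", datacenter)):
    print(name, "->", safer_policy(honest, atk))
```

With these made-up numbers the preferred policy differs between the two attackers, so a static analysis that fixes one attacker profile in advance would pick the wrong defence against the other.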

I also thought that paper was excellent. I’d like to see more consideration of attacker capabilities in these sorts of analyses, not just in the scope that they were writing about, but at the higher-level view of “is an anonymity system even appropriate for this user’s threats?”

I wrote about the question of whether most Tor users have more to fear from their Tor endpoint gathering information about their cleartext usage than they do from NSA-like global adversaries in this report [link to http://homes.esat.kuleuven.be/~lsassama/faithless-v2.pdf no longer works], which predates the two known instances of this attack (by Egerstad, and by the Colorado team) by about a year in the former case.

[I don’t particularly agree with the tone or tactics of Chris’s blog entry. For me, it’s not so much about what the researchers’ liability is, but whether their actions were ethical for Tor operators or for researchers. I think they weren’t, and I don’t think that just because there’s no law against being a jackass, that one should be a jackass. I also think that their paper offered little or nothing of value to the body of literature, and am saddened that it will likely make it much harder for people to publish papers on their experiences operating anonymity systems in the future, even if they have useful information to report beyond “we ran a Tor node for 15 days and this is what we think we saw.” The field needs to know what’s happening on the deployed systems, and there are ways that the Colorado team could have gathered useful data without violating their users’ trust and behaving in an unethical manner. They should be called out for that, I think, rather than having sensationalist FUD triggered by their work. Of course, if it hadn’t been Chris who wrote about the legal implications of their actions, it would have been someone else, so ultimately they’re to blame for opening this can of worms in the first place.]

Len –

I’m not trying to be dense here, but I may be doing it unwittingly. I would have sworn that my blog post here on EC was specifically about the ethics of such research (as opposed to legal liability, for example). At least it was intended that way.

Could it be that you are referring to the ‘tone and tactics’ of Chris Soghoian’s blog post, not mine? I only ask because I’d like to better understand your beef (if any) with what I wrote, since you seem to be a pretty thoughtful person.

Thanks.

Evolutionary models of predator/prey and host/parasite relationships may be highly applicable, since attack and defense certainly co-evolve over time as assailants and targets adapt to each other’s behaviour, and to the changing environment (updated code, protocols, network characteristics).

Come to think of it, behavioural ecology may be more relevant as it looks at how organisms evolve over generations to become more or less adaptable in the face of ecological and environmental landscapes. Flexible logic of adaptation evolved at the genome level, expressed variably at the phenotypic level depending on the environment.

The artificial selection and engineering creativity of humans does put an interesting twist of complexity into play, but the general rule of nature is…stasis kills…eventually. But most information systems are only modified in response to problems, rather than proactively.

I wonder if we could design less predictable, more evolutionary Tor networks?